What kind of monster am I?

MIT's Moral Machine quiz exposed my grotesque form. In the survey, I determined the path of a self-driving car that suffers brake failure. In each scenario, I decided whether to send the car careening into a barrier or to barrel through pedestrian traffic.

Luckily, I prepared for this possibility.

While I could have treated this as a purely ethical exercise, instead I imposed four strict guidelines with the goal of ensuring consumer adoption of autonomous vehicles. The prime directive: save the passengers. I applied the following ruleset to each scenario, in order of evaluation:

- Animals are not humans

- Save the passengers

- Follow traffic rules

- Do not swerve

The rationale: You may find my first rule the most monstrous, but it is a necessary condition for all subsequent rules: animals do not count as passengers or pedestrians. That means that a car full of animals is treated as an empty car, and a crosswalk full of animals is treated as an empty crosswalk. With apologies to the ASPCA, dogs (good dogs) would happily sacrifice themselves for their best friends. And cats, well, cats already treat us like empty space.

Next, the critical mandate: save the passengers. We do not assess number or type of passengers vs. pedestrians that will be endangered by swerving. We will save one criminal passenger when that requires plowing through five children walking to school.

Third, assuming passengers are safe, we follow traffic rules. This means that given the choice between driving through a green light and driving through a red light, we always drive through the green. We assume that pedestrians are less likely to cross against traffic. This rule will become a sort of self-fulfilling prophecy, though the citizens of Manhattan will be stressed out for a while. Coincidentally, the introduction of the automobile a century ago followed a similarly lethal pattern until pedestrians smartened up.

Finally, if we can save the passengers and follow traffic rules, we opt not to swerve. The intention here is that autonomous driving should be as predictable as possible. When we see a car accelerating toward us, we should assume that it will follow its current path. This means that in some cases, a larger number of pedestrians will be struck only for the unhappy accident of legally crossing at the wrong moment. This is terrible and unfair, though the number of victims will be dwarfed by the number of people saved from accidents due to human error.
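For the programmers in the audience, here is a minimal sketch of how the four rules might be evaluated in order. Everything in it is an assumption made for illustration: the `Outcome` fields, the `ANIMALS` set, and the reading that a path into the barrier counts as having a green light. Sorting on a tuple is simply one convenient way to express "in order of evaluation": the first element that differs decides.

```python
from dataclasses import dataclass

# Hypothetical labels; rule 1 says these do not count as people.
ANIMALS = {"dog", "cat"}


@dataclass
class Outcome:
    """One of the two paths the car can take (illustrative model only)."""
    swerves: bool
    passengers_hit: tuple = ()   # passengers harmed if this path is taken
    pedestrians_hit: tuple = ()  # pedestrians harmed if this path is taken
    car_has_green: bool = True   # False when pedestrians are crossing legally


def humans(group):
    """Rule 1: animals are not humans, so drop them from any group."""
    return [member for member in group if member not in ANIMALS]


def rule_key(outcome):
    """Lower is better; tuple order mirrors the order of the rules."""
    return (
        bool(humans(outcome.passengers_hit)),  # Rule 2: save the passengers
        not outcome.car_has_green,             # Rule 3: follow traffic rules
        outcome.swerves,                       # Rule 4: do not swerve
    )


def choose(straight, swerve):
    """Pick the path the ruleset prefers."""
    return min((straight, swerve), key=rule_key)


# The example from the text: one criminal passenger vs. five children.
straight = Outcome(swerves=False, pedestrians_hit=("child",) * 5,
                   car_has_green=False)
swerve = Outcome(swerves=True, passengers_hit=("criminal",))
print(choose(straight, swerve).swerves)
```

Note what the key leaves out: no headcounts, no ages, no professions. Apart from filtering out animals, the rules never look at who is standing in the road.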

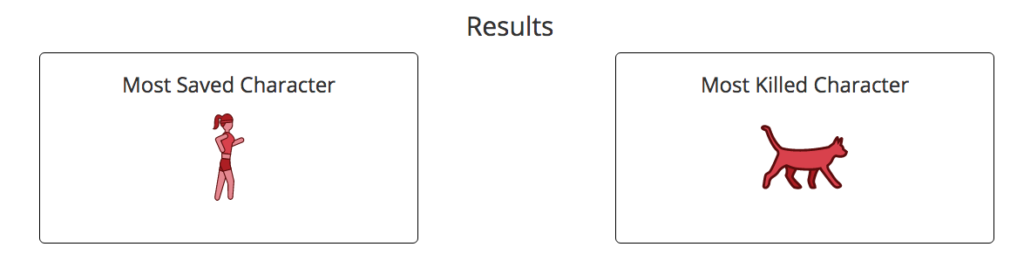

So what kind of monster am I? When these rules are implemented across scenarios, what sort of trends do we see?

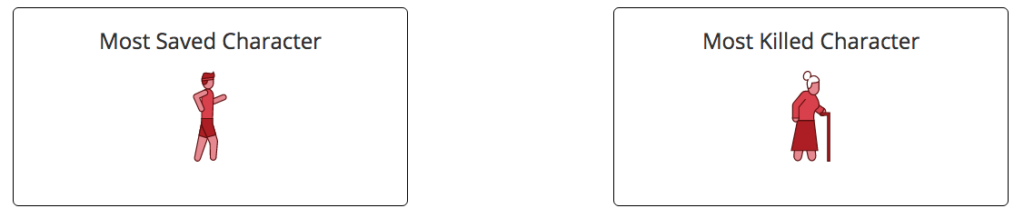

Hm. I disgust me. Clearly, I am both sexist and ageist.

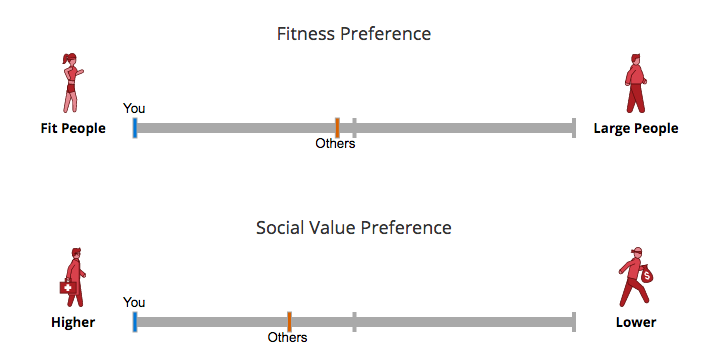

Moreover, we learn about my social and physical preferences:

Awful. Just awful.

But I wanted to know more. I wanted to know how consistently wretched I was, so I took the quiz a second time, using the same set of rules. What kind of monster am I?

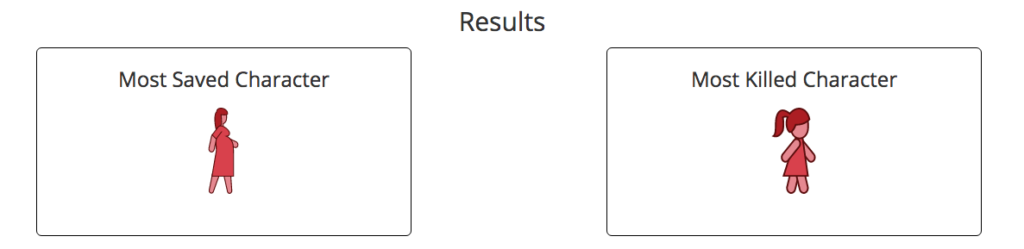

Oh god. Is this worse? It's worse, isn't it? What else do we see?

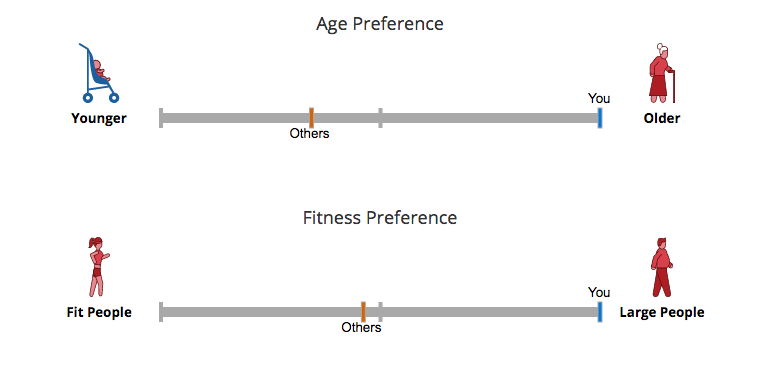

Huh. That's strange. In the first quiz I prioritized the safety of younger, fitter people. This time, they were dispensable. Confused, I took the quiz a third time. Let's settle this: what kind of monster am I?

Well, that makes a bit more sense.

Over the course of a half dozen attempts, I was biased against criminals, athletic people, women, men, large people, babies, and the elderly. I implemented a ruleset that disregarded everyone's identity, but given a limited sample size, any constituency could take me to court for discrimination.
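A rough simulation makes the sample-size problem concrete. This is a toy model, not the quiz itself: I assume 13 scenarios per quiz (an illustrative number), each pitting a hypothetical group A against a group B, with rules that ignore identity so that which group gets spared is a coin flip. The function names and the "three scenarios" threshold are likewise my own inventions.

```python
import random


def quiz_gap(n_scenarios, rng):
    """Net scenarios sparing group A over group B under identity-blind rules."""
    spared_a = sum(rng.random() < 0.5 for _ in range(n_scenarios))
    spared_b = n_scenarios - spared_a
    return spared_a - spared_b


def simulate(n_quizzes=2000, n_scenarios=13, seed=0):
    """Take the quiz many times and record the apparent preference each time."""
    rng = random.Random(seed)
    return [quiz_gap(n_scenarios, rng) for _ in range(n_quizzes)]


gaps = simulate()

# Averaged over many quizzes, the "preference" washes out toward zero.
mean_gap = sum(gaps) / len(gaps)

# Within a single quiz, a gap of three or more scenarios is common.
lopsided = sum(abs(gap) >= 3 for gap in gaps) / len(gaps)

print(f"mean gap across quizzes: {mean_gap:+.2f} scenarios")
print(f"share of quizzes with a gap of 3+: {lopsided:.0%}")
```

Under these assumptions, any single results page is likely to show a lean toward one group or the other, even though the rule behind it has none.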

This is the real dilemma for the trolley scenario and autonomous cars. Given indifferent rules, we will see bias. Given toast, we will see a face.

On a long enough timeline, we will be monsters to everyone.